From the Trenches: Designing a car pooling service for scale in India

From the Trenches is a series on real distributed systems built under real production load, where I’ve been fortunate to be part of the team. This installment documents the actual production flow behind a car-pool booking on Ola: how a request travels across several internal services, how the Allocation Service finds drivers, and how the allotment services close the loop.

This story comes from a large ride-hailing platform where we shipped Share (car-pool) alongside solo rides. The system had to coordinate price discovery, asynchronous matching, driver device locking, and app notifications while staying compatible with existing apps and infra.

Building car pooling at India scale

The company already had a product for booking cabs for solo rides. The challenge was to build a car-pooling product in which multiple customers share the same ongoing ride with the same driver and are dropped off along the way.

Why is this hard?

For starters:

- Multiple riders contend for the same driver/time-slice within sub-second windows.

- We must bound detour (≤ 10–15%) and pickup wait (≤ 3–5 min).

- Price options need to be visible upfront (1-seat/2-seat, normal/express, corporate/non-corp).

- Matching is asynchronous: the app confirms first; the backend allocates later. This was a deliberate design constraint: asynchronous matching yields better matches, and the team did not want to build a separate synchronous matching flow just for Share rides from the start.
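
To make the detour and pickup-wait bounds from the list above concrete, here is a minimal sketch of a feasibility check. It assumes detour is measured as extra travel time relative to the rider's direct route, which the article does not specify; `MatchLimits`, `detour_pct`, and `within_limits` are hypothetical names, and the defaults are simply the upper ends of the quoted ranges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MatchLimits:
    max_detour_pct: float = 0.15     # assumed: upper end of the 10-15% band
    max_pickup_wait_sec: int = 300   # assumed: upper end of the 3-5 min band


def detour_pct(direct_sec: float, shared_sec: float) -> float:
    """Extra travel time of the pooled route relative to the direct route."""
    if direct_sec <= 0:
        raise ValueError("direct_sec must be positive")
    return max(0.0, (shared_sec - direct_sec) / direct_sec)


def within_limits(
    direct_sec: float,
    shared_sec: float,
    pickup_wait_sec: float,
    limits: MatchLimits = MatchLimits(),
) -> bool:
    """True when a proposed pooled match respects both bounds."""
    return (
        detour_pct(direct_sec, shared_sec) <= limits.max_detour_pct
        and pickup_wait_sec <= limits.max_pickup_wait_sec
    )
```

A match that adds 120 seconds to a 1000-second direct trip (12% detour) with a 3-minute pickup wait passes; the same match with a 20% detour or a 7-minute wait does not.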

Design goals (constraints we held the line on)

Keep the topology. Rideshare is the entry point; Demand Engine (DE) is the source of truth for bookings.

JSON/HTTP contracts for every surface (CAPP/DAPP and internal).

Asynchronous matching with clear states (pending → confirmed | cancelled).

Geohash everywhere. Presence, allocation shards, tracking cache, and candidate search all key off geohash prefixes.

Operational clarity. No hidden transitions: Tracking Service (ESMS) reflects reality; drivers and customers see timely state.

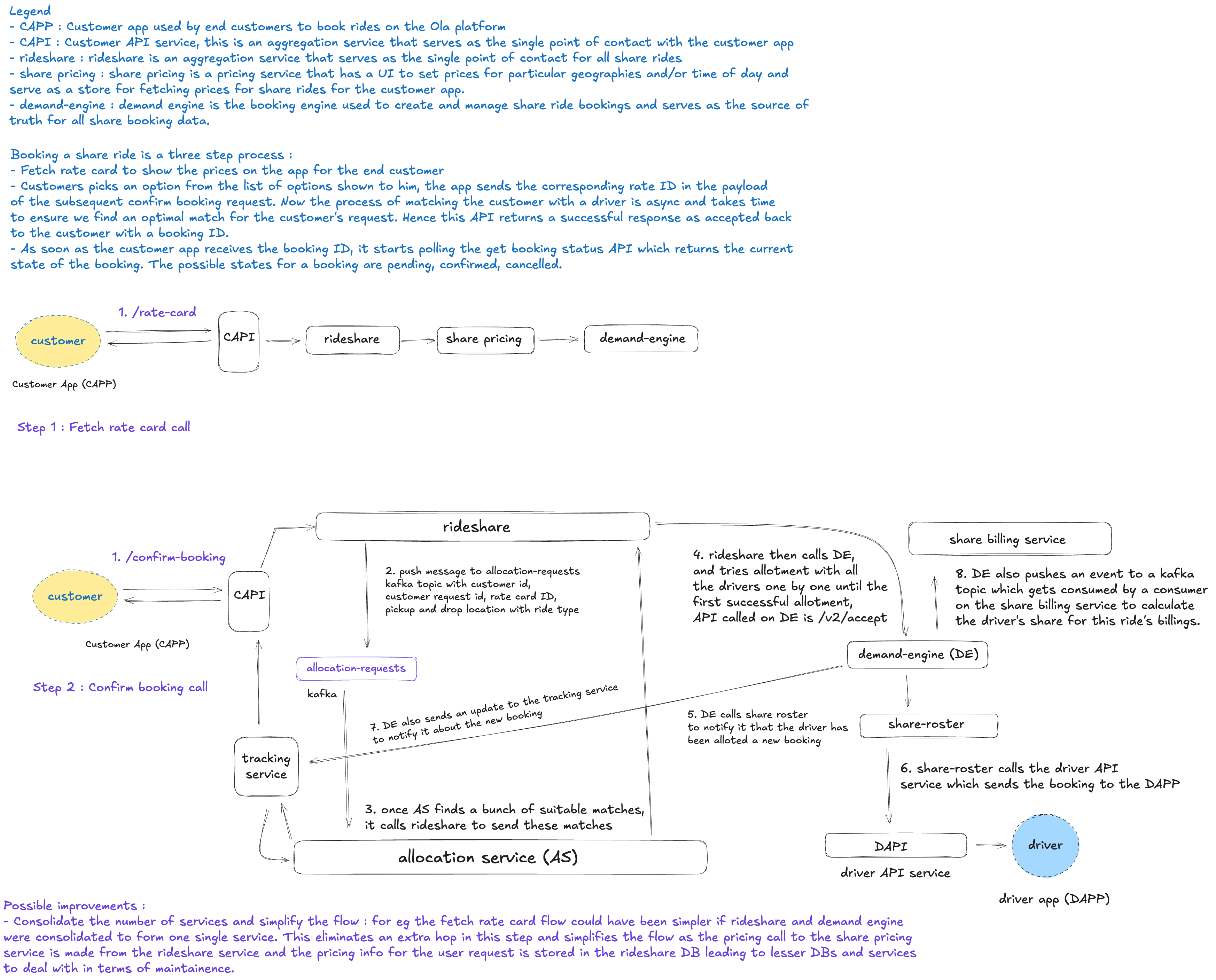

System at a glance

CAPP — Customer app.

CAPI — Public gateway for CAPP.

Rideshare — Aggregator/entry for Share; publishes requests to Allocation; calls DE.

Share Pricing — Computes the eight Share fare variants.

Demand Engine (DE) — Booking store + sanity checks; emits notifications.

Allocation Service (AS) — Builds candidate drivers for a booking.

Tracking Service (ESMS) — Read-through cache of current booking state for polling.

Roster — Driver profile/assignment; talks to DAPI for device lock; updates TS.

DAPP/DAPI — Driver app and its API (MQTT + long-poll mirror).

Kafka (allocationrequests) — Rideshare → Allocation bridge.

Architecture

Geospatial backbone.

Presence/requests carry geohash7 (≈100–150 m).

Shards are geohash prefix(5/6) (city zone sized) for Allocation ownership and Kafka partitioning.

When searching, we always include center + 8 neighbors; at low density we fall back to the parent geohash6 + neighbors.

Tracking reads are geohash-aware so adjacent cells don’t “blink” at boundaries.
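
The geohash mechanics above can be sketched in a few dozen lines. This is a from-scratch illustration of the standard geohash encoding, not the library the team used (the article does not name one). The function names are hypothetical, and neighbors are found by stepping one cell size away from the cell center and re-encoding, which is simple rather than fast.

```python
BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"  # standard geohash alphabet


def encode(lat: float, lng: float, precision: int = 7) -> str:
    """Encode a point as a geohash by interleaving longitude and latitude bits."""
    ranges = {"lat": [-90.0, 90.0], "lng": [-180.0, 180.0]}
    point = {"lat": lat, "lng": lng}
    chars, value, bits, axis = [], 0, 0, "lng"  # the first bit refines longitude
    while len(chars) < precision:
        lo, hi = ranges[axis]
        mid = (lo + hi) / 2
        if point[axis] >= mid:
            value = (value << 1) | 1
            ranges[axis][0] = mid
        else:
            value <<= 1
            ranges[axis][1] = mid
        axis = "lat" if axis == "lng" else "lng"
        bits += 1
        if bits == 5:  # every 5 bits become one base32 character
            chars.append(BASE32[value])
            value, bits = 0, 0
    return "".join(chars)


def bounds(gh: str):
    """Return (lat_lo, lat_hi, lng_lo, lng_hi) of the cell named by `gh`."""
    ranges = {"lat": [-90.0, 90.0], "lng": [-180.0, 180.0]}
    axis = "lng"
    for ch in gh:
        idx = BASE32.index(ch)
        for shift in range(4, -1, -1):
            lo, hi = ranges[axis]
            mid = (lo + hi) / 2
            if (idx >> shift) & 1:
                ranges[axis][0] = mid
            else:
                ranges[axis][1] = mid
            axis = "lat" if axis == "lng" else "lng"
    return (*ranges["lat"], *ranges["lng"])


def neighbors(gh: str):
    """Return the adjacent cells (8 away from the poles) at the same precision."""
    lat_lo, lat_hi, lng_lo, lng_hi = bounds(gh)
    dlat, dlng = lat_hi - lat_lo, lng_hi - lng_lo
    clat, clng = (lat_lo + lat_hi) / 2, (lng_lo + lng_hi) / 2
    out = []
    for i in (-1, 0, 1):
        for j in (-1, 0, 1):
            if i == 0 and j == 0:
                continue
            lat = clat + i * dlat
            if not -90.0 <= lat <= 90.0:
                continue  # no cell beyond the poles
            lng = (clng + j * dlng + 180.0) % 360.0 - 180.0  # wrap at the antimeridian
            out.append(encode(lat, lng, len(gh)))
    return out


cell = encode(12.9716, 77.5946, 7)     # presence/request precision
shard = cell[:5]                       # allocation shard, also the Kafka key
search_set = {cell, *neighbors(cell)}  # center + 8 neighbors
```

Because a geohash is a prefix code, the shard is a plain string slice of the cell, which is what makes prefix sharding cheap.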

Happy-path flow

Step 1 — Rate card (price discovery)

CAPP → CAPI → Rideshare → Share Pricing → DE (eligibility) & OS Lite (coupons)

    POST /share/rate-card
    Content-Type: application/json

    {
      "rider_id": "r123",
      "pickup": {"lat": 12.9716, "lng": 77.5946, "geohash": "tdr5re4"},
      "dropoff": {"lat": 12.9352, "lng": 77.6245, "geohash": "tdr5pvy"},
      "time": "2025-09-03T09:10:00Z",
      "corp": false
    }

Response — eight combinations, one place for coupons:

    {
      "rate_card_id": "rc_7f2a",
      "options": [
        {"seats": 1, "mode": "normal",  "corp": false, "price": 129, "currency": "INR"},
        {"seats": 1, "mode": "express", "corp": false, "price": 149, "currency": "INR"},
        {"seats": 2, "mode": "normal",  "corp": false, "price": 178, "currency": "INR"},
        {"seats": 2, "mode": "express", "corp": false, "price": 198, "currency": "INR"},
        {"seats": 1, "mode": "normal",  "corp": true,  "price": 125, "currency": "INR"},
        {"seats": 1, "mode": "express", "corp": true,  "price": 145, "currency": "INR"},
        {"seats": 2, "mode": "normal",  "corp": true,  "price": 172, "currency": "INR"},
        {"seats": 2, "mode": "express", "corp": true,  "price": 192, "currency": "INR"}
      ],
      "applied_coupons": [{"code": "OSLITE10", "value": -10}]
    }

Step 2 — Confirm booking (async matching)

CAPP → CAPI → Rideshare

Rideshare (a) writes pending into Tracking, (b) publishes to Kafka allocationrequests, and (c) returns 202 Accepted with booking_id.

    POST /share/confirm-booking
    Content-Type: application/json

    {
      "rider_id": "r123",
      "rate_card_id": "rc_7f2a",
      "choice": {"seats": 1, "mode": "express", "corp": false},
      "pickup": {"lat": 12.9716, "lng": 77.5946, "geohash": "tdr5re4"},
      "dropoff": {"lat": 12.9352, "lng": 77.6245, "geohash": "tdr5pvy"}
    }

Message put on Kafka (allocationrequests)

    {
      "type": "share.booking.requested",
      "booking_id": "b_123",
      "rider_id": "r123",
      "pickup": {"lat": 12.9716, "lng": 77.5946, "geohash": "tdr5re4"},
      "dropoff": {"lat": 12.9352, "lng": 77.6245, "geohash": "tdr5pvy"},
      "choice": {"seats": 1, "mode": "express", "corp": false},
      "shard": "tdr5r"  // geohash prefix(5)
    }
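
The three things Rideshare does on confirm can be sketched as one handler. This is an illustration under stated assumptions, not the production code: `tracking` is a plain dict standing in for the Tracking Service, `publish` is a hypothetical callable standing in for a Kafka producer, and the booking ID format is made up.

```python
import uuid
from typing import Callable, Dict, Tuple

TOPIC = "allocationrequests"


def confirm_booking(
    request: dict,
    tracking: Dict[str, dict],
    publish: Callable[[str, str, dict], None],
) -> Tuple[int, dict]:
    """Accept a Share booking without waiting for a driver match."""
    booking_id = "b_" + uuid.uuid4().hex[:12]  # hypothetical ID scheme

    # (a) Make the booking visible to pollers before any matching happens.
    tracking[booking_id] = {"booking_id": booking_id, "state": "pending"}

    # (b) Hand the request to Allocation, keyed by the pickup's geohash prefix.
    shard = request["pickup"]["geohash"][:5]
    publish(TOPIC, shard, {
        "type": "share.booking.requested",
        "booking_id": booking_id,
        "rider_id": request["rider_id"],
        "pickup": request["pickup"],
        "dropoff": request["dropoff"],
        "choice": request["choice"],
        "shard": shard,
    })

    # (c) 202 Accepted: the match arrives later and the client polls for it.
    return 202, {"booking_id": booking_id}
```

Writing the pending state before publishing means a poll that races the allocation never sees an unknown booking.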

Allocation Service consumes the shard’s partition, computes candidates (center + neighbors, detour/ETA/fairness), and calls back Rideshare:

    POST /share/internal/allocation/candidates
    Content-Type: application/json

    {
      "booking_id": "b_123",
      "candidates": [
        {"driver_id": "d_456", "pickup_eta_sec": 180, "detour_pct": 0.12, "score": 0.71},
        {"driver_id": "d_789", "pickup_eta_sec": 220, "detour_pct": 0.10, "score": 0.75}
      ]
    }

Rideshare → DE: create then accept.

    POST /de/v1/booking

    {
      "booking_id": "b_123",
      "rider_id": "r123",
      "driver_id": "d_456",
      "fare": {"price": 149, "currency": "INR"},
      "corp": false,
      "tax_context": {"gst_mode": "non_corp"},
      "trip_rules": 1  // bitmask: 1=cash, 2=otp, 4=hotspot
    }

    POST /de/v2/accept

    {"booking_id": "b_123"}

Roster & DAPP

Roster records the assignment and locks the device via DAPI on the first booking; DE then pushes the assignment to DAPP over MQTT:

    POST /dapi/v1/device/lock

    {"driver_id": "d_456", "booking_id": "b_123", "reason": "first_booking"}

    {"type": "booking_assigned", "booking_id": "b_123", "driver_id": "d_456", "pickup_eta_sec": 180}

Tracking is updated to confirmed, so CAPP polling flips from pending to confirmed.

Step 3 — Status polling

    GET /share/booking-status?booking_id=b_123

    {
      "booking_id": "b_123",
      "state": "confirmed",
      "driver": {"id": "d_456", "eta_sec": 180},
      "fare": {"price": 149, "currency": "INR"}
    }

States: pending → confirmed | cancelled.
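
The state list above is small enough to enforce with an explicit transition table. The sketch below treats confirmed and cancelled as terminal because those are the only transitions the article lists. A real system would likely allow more (for example cancelling after confirmation), so read this as an assumption. `BookingState`, `ALLOWED`, and `transition` are hypothetical names.

```python
from enum import Enum


class BookingState(str, Enum):
    PENDING = "pending"
    CONFIRMED = "confirmed"
    CANCELLED = "cancelled"


# Only the transitions listed in the article; both end states are terminal here.
ALLOWED = {
    BookingState.PENDING: {BookingState.CONFIRMED, BookingState.CANCELLED},
    BookingState.CONFIRMED: set(),
    BookingState.CANCELLED: set(),
}


def transition(current: BookingState, target: BookingState) -> BookingState:
    """Return the new state, or raise if the move is not in the table."""
    if target not in ALLOWED[current]:
        raise ValueError(f"illegal transition {current.value} -> {target.value}")
    return target
```

Rejecting anything outside the table is one way to keep the "no hidden transitions" goal honest: a late or duplicated update cannot flip a confirmed booking back to pending.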

Core concepts (naming we used on whiteboards)

Rate card: Eight Share fare variants per O/D/time/product; coupons applied via OS Lite.

Booking: Lifecycle lives in DE; Tracking reflects state for fast reads.

Allocation candidate: (driver_id, eta, detour_pct, score) computed by shard owner using geohash7 + neighbors.

Shard (allocation): geohash prefix(5/6); also the Kafka partition key for locality and ordering.

Trip rules bitmask: 1=cash, 2=otp_enabled, 4=hotspot (e.g., 3 = cash+OTP).
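
The bitmask maps naturally onto Python's `enum.IntFlag`. A small sketch, with `TripRules` and `decode_rules` as illustrative names (only the bit values come from the article):

```python
from enum import IntFlag
from typing import List


class TripRules(IntFlag):
    CASH = 1
    OTP_ENABLED = 2
    HOTSPOT = 4


def decode_rules(mask: int) -> List[str]:
    """List the rule names set in an integer mask, lowest bit first."""
    rules = TripRules(mask)
    return [rule.name.lower() for rule in TripRules if rule in rules]


cash_and_otp = TripRules.CASH | TripRules.OTP_ENABLED  # int value 3
```

Here `decode_rules(3)` yields `["cash", "otp_enabled"]`, matching the "3 = cash+OTP" example, and the combined flag still serializes as a plain integer in JSON.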

DAPP delivery: MQTT push; Longpoller mirrors the stream for flaky networks.

Final API Endpoints

| Surface | Endpoint | Method | Description |
| --- | --- | --- | --- |
| CAPI | /share/rate-card | POST | Returns eight pricing options for Share |
| CAPI | /share/confirm-booking | POST | Starts async matching; returns booking_id (202 Accepted) |
| CAPI | /share/booking-status | GET | Current state: pending/confirmed/cancelled |
| Rideshare (internal) | /share/internal/allocation/candidates | POST | AS → Rideshare with driver candidates |
| DE | /de/v1/booking | POST | Create booking in system of record |
| DE | /de/v2/accept | POST | Accept/lock selected driver in DE |
| Roster | /share-roster/v1/driver/booking | POST | Notify roster of new driver assignment |
| DAPI | /dapi/v1/device/lock | POST | Lock booking on driver device (first booking of trip) |

Kafka

Topic: allocationrequests (partition key = geohash prefix(5/6)).
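
Keying by geohash prefix means every request from the same zone lands on the same partition, which gives both locality and per-zone ordering. The sketch below shows the idea with CRC32 as a stand-in hash. That is an assumption made for a dependency-free example: Kafka's default Java partitioner hashes key bytes with murmur2, so the partition numbers here will not match a real cluster. `partition_for` is a hypothetical helper.

```python
import zlib


def partition_for(geohash: str, num_partitions: int, prefix_len: int = 5) -> int:
    """Map a geohash to a partition via its prefix (stand-in hash, not murmur2)."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    key = geohash[:prefix_len]
    return zlib.crc32(key.encode("utf-8")) % num_partitions
```

Two pickups in `tdr5re4` and `tdr5rxz` share the prefix `tdr5r` and therefore the same partition, so a single Allocation consumer sees them in order.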

Geohash usage (the details you’ll be asked about)

Presence & requests: geohash7 default; geohash8 allowed in CBDs.

Neighbors always: Search set = {center} ∪ neighbors(center); if candidates < N, fallback to parent(6) ∪ neighbors(parent).
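
The neighbor rule with fallback can be sketched as follows. The driver index is a hypothetical dict from geohash7 cell to driver IDs, and `neighbors_fn` is any function that returns the adjacent cells of a geohash at the same precision; both are assumptions made to keep the example self-contained.

```python
from typing import Callable, Dict, Iterable, List


def find_candidates(
    center7: str,
    drivers_by_cell: Dict[str, List[str]],
    neighbors_fn: Callable[[str], Iterable[str]],
    min_candidates: int,
) -> List[str]:
    """Search center + neighbors at precision 7, widening to precision 6 if sparse."""
    cells = {center7, *neighbors_fn(center7)}
    found = sorted({d for c in cells for d in drivers_by_cell.get(c, [])})
    if len(found) >= min_candidates:
        return found

    # Fallback: parent(6) plus its neighbors. Drivers are indexed at precision 7,
    # so a prefix match selects every driver inside those larger cells.
    parent = center7[:6]
    parent_cells = {parent, *neighbors_fn(parent)}
    return sorted({
        d
        for cell, drivers in drivers_by_cell.items()
        if cell[:6] in parent_cells
        for d in drivers
    })
```

The fallback set is a superset of the first search, since any geohash7 neighbor lies either in the same geohash6 parent or in an adjacent one, so widening never drops a candidate that was already found.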

Tracking reads: We read the current cell + neighbors so riders on borders don’t see state “blink.”

Why geohash (over H3 here): prefix sharding works well with Kafka and string keys we already had; the neighbor fix neutralizes boundary artifacts at our densities.

Runtime behavior & extensibility (quick notes)

Everything JSON/HTTP. Easier on integration and debugging; MQTT delivery for DAPP unchanged.

Async matching as a contract. confirm-booking returns accepted immediately; the app polls Tracking.

Pricing knobs live outside code. Ratecard data and coupons flow through Share Pricing, not the allocation hot path.

Geospatial knobs are flags. Neighbor depth, fallback precision, and shard ownership are runtime-tunable per city.

Taxes & corp: Non-corp GST is computed on create; corporate adjustments happen on accept (as in the original flow).

Tolls: DAPP → Rideshare → DE → share billing service with toll_id/timestamp; BPD resolves toll price.

Operational notes (with a staff hat on)

SLOs we watched: assign_latency_p95 per shard, presence_staleness_p95, and the boring one—status propagation lag from DE → Tracking (you’ll hear about this first on Twitter).

Shard health: If a geohash-prefix partition falls behind, we temporarily reduce neighbor expansion and prefer solo until lag clears.
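
One way to express that degradation rule is a small policy function driven by consumer lag. Everything here is illustrative: the thresholds are made-up numbers, `SearchPolicy` and `policy_for` are hypothetical names, and the gap between the enter and exit thresholds (hysteresis) is my own addition to stop the policy from flapping, not something the article describes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SearchPolicy:
    neighbor_rings: int  # 0 = center cell only, 1 = center + 8 neighbors
    prefer_solo: bool


NORMAL = SearchPolicy(neighbor_rings=1, prefer_solo=False)
DEGRADED = SearchPolicy(neighbor_rings=0, prefer_solo=True)


def policy_for(
    consumer_lag: int,
    current: SearchPolicy = NORMAL,
    enter_at: int = 5000,   # assumed lag (messages) at which we degrade
    exit_at: int = 1000,    # assumed lag at which we recover
) -> SearchPolicy:
    """Pick the search policy for a shard given its partition lag."""
    if consumer_lag >= enter_at:
        return DEGRADED
    if consumer_lag <= exit_at:
        return NORMAL
    return current  # between thresholds: keep whatever policy is active
```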

Device lock: The only “critical section” is the driver’s device lock in Roster/DAPI. Keep it narrow; time-box the lock; never block the whole shard on it.
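
A time-boxed lock is easy to get wrong, so here is a minimal single-process sketch of the idea: the lock is per driver, it expires on its own, and a failed acquire returns immediately instead of blocking. The `DeviceLocks` class, the 30-second TTL, and the in-memory dict are all assumptions; production would need a shared store and atomic operations.

```python
import time
from typing import Callable, Dict, Tuple


class DeviceLocks:
    """Per-driver lock with expiry; in-memory and not thread-safe (illustration only)."""

    def __init__(self, ttl_sec: float = 30.0, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_sec
        self._clock = clock
        self._locks: Dict[str, Tuple[str, float]] = {}  # driver_id -> (booking_id, expires_at)

    def acquire(self, driver_id: str, booking_id: str) -> bool:
        """Take the lock if it is free, expired, or already held by this booking."""
        now = self._clock()
        held = self._locks.get(driver_id)
        if held and held[1] > now and held[0] != booking_id:
            return False  # another booking holds a live lock; caller moves on
        self._locks[driver_id] = (booking_id, now + self._ttl)
        return True

    def release(self, driver_id: str, booking_id: str) -> None:
        """Release only if this booking is the current holder."""
        held = self._locks.get(driver_id)
        if held and held[0] == booking_id:
            del self._locks[driver_id]
```

Because the key is the driver, contention on one device never stalls other drivers in the same shard, and the expiry bounds how long a crashed caller can hold a device.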

Idempotency: Write APIs accept retries (mobile networks are messy). Dedup keys are per route + rider/driver.
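
A retry-safe write path can be sketched as a wrapper that remembers the first response per dedup key. The article says keys are per route plus rider or driver. The extra client-supplied `request_id` below is my assumption, added so that a rider can legitimately make a second, distinct request on the same route. `IdempotentHandler` is a hypothetical name, and the dict stands in for a store with expiry.

```python
from typing import Callable, Dict, Tuple


class IdempotentHandler:
    """Run a handler at most once per (route, actor, request_id); replay on retries."""

    def __init__(self, handler: Callable[[dict], dict]):
        self._handler = handler
        self._seen: Dict[Tuple[str, str, str], dict] = {}

    def handle(self, route: str, actor_id: str, request_id: str, payload: dict) -> dict:
        key = (route, actor_id, request_id)
        if key in self._seen:
            return self._seen[key]  # retry: return the stored response, no side effects
        response = self._handler(payload)
        self._seen[key] = response
        return response
```

With this in front of confirm-booking, a client that times out and resends the same request gets the original booking_id back instead of creating a second booking.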

Observability: Every transition (requested, candidates, accepted) emits a structured event; Tracking carries correlation IDs so CAPP/DAPP logs line up with DE facts.
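
A structured event for each transition might look like the sketch below. The field names, the `share.booking.<transition>` naming (extrapolated from the `share.booking.requested` message type), and the `booking_event` helper are assumptions for illustration.

```python
import json
from datetime import datetime, timezone
from typing import Optional

TRANSITIONS = {"requested", "candidates", "accepted"}


def booking_event(
    transition: str,
    booking_id: str,
    correlation_id: str,
    at: Optional[datetime] = None,
    **fields,
) -> str:
    """Serialize one booking transition as a single JSON log line."""
    if transition not in TRANSITIONS:
        raise ValueError(f"unknown transition: {transition}")
    at = at or datetime.now(timezone.utc)
    record = {
        **fields,  # extra context first, so the core keys below always win
        "event": f"share.booking.{transition}",
        "booking_id": booking_id,
        "correlation_id": correlation_id,
        "at": at.isoformat(),
    }
    return json.dumps(record, sort_keys=True)
```

Carrying the same `correlation_id` from the CAPP request through Kafka to the DE calls is what lets app logs and backend events be joined on one key.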

Sample payloads (for completeness)

Allocation request (Kafka message)

    {
      "type": "share.booking.requested",
      "booking_id": "b_123",
      "rider_id": "r123",
      "pickup": {"lat": 12.9716, "lng": 77.5946, "geohash": "tdr5re4"},
      "dropoff": {"lat": 12.9352, "lng": 77.6245, "geohash": "tdr5pvy"},
      "choice": {"seats": 1, "mode": "express", "corp": false},
      "shard": "tdr5r"
    }

Tracking status (CAPP polling)

    {
      "booking_id": "b_123",
      "state": "pending",
      "updated_at": "2025-09-03T09:10:12Z"
    }

Driver assignment (MQTT to DAPP)

    {
      "type": "booking_assigned",
      "booking_id": "b_123",
      "driver_id": "d_456",
      "pickup_eta_sec": 180
    }

What was the max scale the system experienced?

This design scaled up to 2k requests per second on the confirm booking path and 6k requests per second on the fetch rate card and get booking status endpoints.